The impact factor (IF) measures how often the average article that a scientific journal published over the last few years has been cited in a given year. Impact factors help reveal the importance of a journal and can also be used to rank the literature available in a specific field of research (“What is considered a good impact factor?” 2017). For example, if a journal’s recent articles have been cited numerous times, that journal is more likely to be highly ranked.

Medical research is a challenging path characterized by multiple milestones. Scientific publishing is often the last obstacle researchers need to overcome. In the end, reporting the findings, including negative results, fosters interoperability and transparency. To measure research success, impact factors have become vital indicators of scientific progress.

The calculation of impact factors follows clear rules and usually covers a two-year window (“Measuring Your Impact: Impact Factor, Citation Analysis, and other Metrics: Journal Impact Factor (IF)” 2018). The impact factor of a journal is A divided by B. For example, to calculate the 2018 impact factor of a journal, researchers need two numbers: A) the total number of citations received in 2018 by all items the journal published in 2016 and 2017; and B) the total number of citable items the journal published in 2016 and 2017 (“Impact factors: arbiter of excellence?” 2003).
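Written as an equation, the definition above reads as follows. The symbols are introduced here only for illustration and do not come from the cited sources: $C_{2018}(y)$ is the number of citations received in 2018 by items the journal published in year $y$, and $N_y$ is the number of citable items the journal published in year $y$.

$$
\mathrm{IF}_{2018} = \frac{A}{B} = \frac{C_{2018}(2016) + C_{2018}(2017)}{N_{2016} + N_{2017}}
$$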

It’s interesting to mention that Eugene Garfield initially created the impact factor calculation to evaluate all articles available for listing in Current Contents. Note that because impact factors cover the two years before the year of interest, they reflect the influence a journal’s articles have during their first two years after publication.

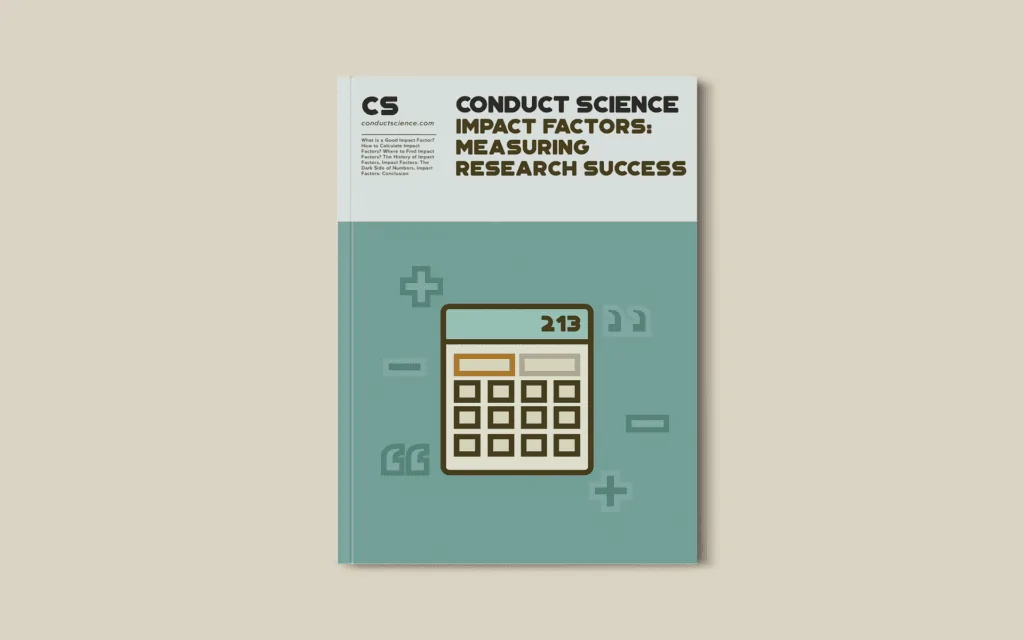

One of the most prominent sources of impact factors and rankings is the Journal Citation Reports (JCR) (“What is considered a good impact factor?” 2017). The platform covers journals in various areas of research, such as science and technology. It includes various indicators, such as cited half-life, source data listings, subject categories, publisher information, citation counts, and, of course, impact factors. Eigenfactor scores, which estimate how often a journal is likely to be used by researchers, are also available. The JCR database tracks the impact factors of more than 12,000 journals. Impact factors range from 0 to over 10. Note that in 2016, only 213 journal titles, around 2% of all tracked journals, received an impact factor of 10 or higher (“What is considered a good impact factor?” 2017).

Impact factors and number of journals

The online procedure is straightforward: a researcher can enter the journal title of interest in the JCR database and compare scores and rankings (“How do I find the impact factor and rank for a journal?”).

An alternative tool is the SCImago Journal Rank (SJR) (“Measuring Your Impact: Impact Factor, Citation Analysis, and other Metrics: Journal Impact Factor (IF)” 2018). It uses the information stored in Scopus, a broad platform that contains more than 15,000 journals, 4,000 publishers from all over the world, and 1,000 open sources.

On top of that, every year, a report with impact factors is published by Clarivate, formerly the Intellectual Property and Science business of Thomson Reuters.

In today’s digital era, research and technology go hand in hand, and digital solutions benefit science. From online recruiting to healthcare apps, researchers and patients profit from the implementation of technology. However, people often forget that only a couple of decades ago the only way to access research sources was to search printed literature. Interestingly enough, journal impact factors were originally designed to help librarians track literature searches and purchase new journals. To be more precise, impact factors were initially designed as bibliometric indicators by the Institute for Scientific Information (ISI) (“Impact factors: arbiter of excellence?” 2003). Impact factors first appeared in practice in 1963. Back then, they were widely used in libraries to track subscriptions and record shelving data. However, some questions remain unanswered: is the impact factor relevant in libraries when many individuals reshelve books themselves?

Slowly, impact factors have become vital indicators that have started to influence job applications and shape funding decisions. Although these indexes do not represent the quality of a particular article, impact factors are widely used to assess literature and report excellence. In fact, many investigators provide impact factors next to their work when applying for a job or funding (“Impact factors: arbiter of excellence?” 2003). However, experts and committees agree that the focus should be on the individual proposal or work – not on the impact factor (“Journal impact factors” 2013). In the end, just like with shelving data, many research pieces are reviewed without being cited. As a result, people have started to compare impact factors and online hits.

Impact factors are essential in research and publishing. Although impact factors help experts systematize journals, citations, and searches, many issues arise from the implementation of impact factors.

First of all, some experts claim that impact factors should not be used to measure the quality of either journals or individual research (“Impact factors: arbiter of excellence?” 2003). In fact, one of the main recommendations of the San Francisco Declaration on Research Assessment reads, “Do not use journal-based metrics, such as Journal Impact Factors, as a surrogate measure of the quality of individual research articles, to assess an individual scientist’s contributions, or in hiring, promotion, or funding decisions.” A more effective way to measure the quality of a researcher’s individual work is to evaluate the number of citations for each of their papers. After all, some authors publish in lesser-known journals due to the specifics of their work.

Also, we should not forget that not all research titles are tracked in the JCR database. Even for those that are, the results can be misleading. For instance, it has been reported that around 15% of the articles in a given journal account for half of its citations, which means that a journal’s impact factor is driven largely by a small share of highly cited articles. It’s interesting to mention that the founder of ISI, Eugene Garfield, said that a paper cited more than 100 times can be called a citation classic (“Journal Impact factors,” 2013). But does high citation represent quality?

Another problem that may arise is related to timing. Usually, new journals must build up a record of citations before they are included in the JCR database, for instance. Since impact factors cover the two years prior to the year of calculation, the field of work should also be considered. Some research areas are growing fast, and changes happen on a regular basis. On the other hand, the impact factors of certain journals and areas do not differ significantly from year to year (“Journal Impact factors,” 2013). Again, numbers are different for each field of research. For psychology, for instance, the impact factors are low compared to other areas, simply because the publication process is relatively slow (“Journal Impact factors,” 2013).

Speaking of time, the long-term and the short-term impact of a journal also matter. Note that the half-life of a publication plays a crucial role in determining the impact factor of a journal. For example, it has been reported that the half-life for physiology is usually longer than the half-life for molecular biology, which means that the scores for physiology will eventually increase over the years (“Impact factors: arbiter of excellence?” 2003).

Citation patterns also vary between fields. For instance, dual citation formats can affect the score of a journal or an article. Surprisingly, experts have revealed that 7% of all references are cited incorrectly, especially in pieces with dual volume-numbering formats. For example, before 2000, the citation format used by the American Journal of Physiology couldn’t be recognized by the ranking system of ISI (“Impact factors: arbiter of excellence?” 2003).

There are also issues with the actual numbers when calculating impact scores (“Journal Impact factors,” 2013). For instance, in 2012, Psychological Science received an impact factor of 4.543. The number of citations to its articles published in 2010 and 2011 was 2,344, and the number of articles it published in those two years was 516. Since both inputs are whole numbers, experts wonder why a precision of three decimal places is needed instead of rounding. Although the decimals give an impression of change from year to year, such small differences are not significant.
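The arithmetic behind this example can be checked directly from the two counts quoted above. The following is a minimal sketch; the function name `impact_factor` and the variable names are chosen here for illustration and are not part of any official tool.

```python
def impact_factor(citations: int, citable_items: int) -> float:
    """Citations in the census year to items from the two prior years,
    divided by the number of citable items from those two years."""
    if citable_items <= 0:
        raise ValueError("citable_items must be positive")
    return citations / citable_items


# Figures quoted in the text for Psychological Science (2012 impact factor).
citations_2012 = 2344  # 2012 citations to articles published in 2010 and 2011
citable_items = 516    # articles published in 2010 and 2011

score = impact_factor(citations_2012, citable_items)

three_decimals = f"{score:.3f}"  # the precision at which the score is reported
one_decimal = f"{score:.1f}"     # a coarser rounding for comparison

print(three_decimals, one_decimal)
```

Rounded to three decimals the quotient is 4.543, and rounded to one decimal it is 4.5, which shows how little information the extra digits add when the inputs are counts of a few hundred to a few thousand.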

When it comes to calculation and statistics, we should note that the impact factor is a mean: the average number of citations per article. Because the mean is sensitive to the shape of the distribution, some statistical rules apply. For example, if the distribution is skewed, the mean can still indicate a general trend; however, it can be strongly affected by outliers, such as highly cited articles (“Journal Impact factors,” 2013). As a matter of fact, the citation distributions of most journals are positively skewed: when only a few papers are cited often, they can raise the impact factor of the whole journal. It’s the same for researchers: some authors are highly cited, but this is based on only a fraction of their work. Take the Annual Review of Psychology: its team selects only popular and timely topics, so it’s normal for it to be cited more in that particular year.
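The effect of a positively skewed distribution on the mean can be illustrated with a small sketch. The citation counts below are made up for illustration and do not describe any real journal; the comparison with the median is an addition here, used only to show what a typical article receives.

```python
from statistics import mean, median

# Hypothetical citation counts for ten articles in one journal (made-up data).
citations = [0, 0, 1, 1, 2, 2, 3, 4, 7, 80]

journal_mean = mean(citations)      # the kind of average an impact factor reports
journal_median = median(citations)  # what a typical article in the set receives

# Share of all citations contributed by the single most-cited article.
top_share = max(citations) / sum(citations)

print(journal_mean, journal_median, top_share)
```

With these made-up numbers, the mean is 10 citations per article while the median is 2, and a single article accounts for 80% of all citations. Removing that one outlier would drop the mean to roughly 2.2.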

It is not only the frequency of citation that matters but also the nature of the publication. Note that the number of articles in a journal and their length also affect the distribution. A journal that publishes many short empirical studies can get a lot of hits but no citations. On the other hand, one highly cited paper in a small journal will affect its impact factor significantly (“Journal Impact factors,” 2013).

Last but not least, readership is a paramount factor. Some journals are distributed for free and may reach more experts. For the Association for Psychological Science, the indexes may be skewed by the size of the readership. As a result, many authors may aim to publish only to gain readers (“Journal Impact factors,” 2013).

Impact factors are a popular measure of research success. They indicate the frequency with which articles in a given journal have been cited. Based on them, journals can be ranked according to popularity and quality, especially when it comes to medical publications (“Impact factors: arbiter of excellence?” 2003). Note that empirical journals often have lower impact factors than review journals.

However, as explained above, many issues may arise. Since impact factors provide information about the frequency of citation to articles published in a given journal, other factors should be considered: the field of work, length, readership, mean impact, and citation patterns. As a result, many experts disagree with the popular practice of using impact factors as indicators of the quality of individual work. In fact, researchers and committees have started to implement other measures of citation that can be used as an alternative to impact factors.

Frank, M. (2003). Impact factors: arbiter of excellence? Journal of the Medical Library Association, 91(1).

How do I find the impact factor and rank for a journal? Retrieved from https://www.hsl.virginia.edu/services/howdoi/hdi-jcr.cfm

Journal Impact factors. (2013, September). Retrieved from https://www.psychologicalscience.org/observer/journal-impact-factors

Measuring Your Impact: Impact Factor, Citation Analysis, and other Metrics: Journal Impact Factor (IF). (2018, January 17). Retrieved from https://researchguides.uic.edu/if/impact

What is considered a good impact factor? (2017, August 08). Retrieved from http://mdanderson.libanswers.com/faq/26159